What Is an AI SDR? A Practical, No-Hype Explanation

Introduction — Why “AI SDR” Became a Thing at All

If you sit with any VP of Sales long enough, the conversation eventually circles back to the same pressure: the top of the funnel feels heavier every year. SDR teams are being told to research deeper, personalize smarter, respond faster, and hit higher quotas — all while managing inboxes that look like Times Square billboards. It's not uncommon to hear about SDRs cycling out in under 12 months, or watching a team of six churn through hundreds of leads a week just to book a handful of qualified meetings.

- Higher research and personalization expectations

- Growing responsiveness demands from inbound leads

- Cluttered inbox environments that reduce reply visibility

- SDR burnout and short average tenure cycles

The frustration isn't that SDRs aren't working hard. The frustration is that the job has quietly expanded behind the scenes. Five years ago, writing a decent outbound sequence in Outreach or Salesloft and keeping Salesforce tidy was considered competent execution. Today, SDRs are expected to monitor intent data from tools like G2, react to website activity in real time, chase inbound demo requests before they go cold, and personalize at a level that wasn't even mainstream in 2018. That's before we even talk about follow-ups.

- New data sources (G2, website behavior, product usage)

- New response expectations (real-time vs. daily)

- New personalization standards (role, context, timing)

- More follow-ups across more channels

What changed is not just software. It's buyer behavior. Prospects respond more slowly, switch channels mid-conversation, and still expect near-instant replies, especially in inbound or product-led environments. Companies selling into mid-market SaaS feel this particularly hard; if a lead fills out a "Talk to Sales" form at 10:37 AM and hears nothing back, they may well take a competitor's call by 10:43. AEs see it, SDR managers see it, and revenue leaders definitely see it when pipeline reviews start dragging.

This environment is what gave rise to the term "AI SDR." Not as a buzzword, not as a robot salesperson, and not as a magical replacement for humans — but as an attempt to solve a very real operational bottleneck at the top of the funnel. Teams didn't start asking for AI SDRs because they wanted AI. They started asking because the math on headcount, throughput, and timing stopped working the way it used to.

- The funnel got heavier

- Buyer patience declined

- SDR bandwidth plateaued

- Automation alone couldn’t fill the gap

Before getting into definitions or mechanics, it's worth acknowledging that this shift didn't happen in a vacuum. The combination of buyer impatience, GTM tool overload, and SDR burnout created a gap that pure automation couldn't close. At some point, someone had to build systems that could handle the parts of sales development humans weren't designed to scale — and that's where the idea of an "AI SDR" actually started to take form.

What an AI SDR Actually Is (And What People Get Wrong)

The term "AI SDR" gets thrown around a lot, and most of the definitions are either too generous or too simplistic. If you ask a founder, they might describe it as a bot that books meetings. If you ask a sales leader, they'll tell you it's software that helps with outbound. If you ask a product marketer, you'll probably get a slide mentioning NLP, LLMs, and a diagram with arrows pointing at Salesforce.

- Founders frame it as automation

- Sales leaders frame it as outbound assistance

- Marketers frame it as AI infrastructure

The reality is more boring and more useful: an AI SDR is software that handles the parts of sales development that depend on timing, detection, and follow-up rather than persuasion. It isn't trying to be a full sales rep, and it definitely isn't trying to close deals. Its job is to keep the top of the funnel warm, responsive, and qualified so humans don't have to fight fires all day.

- It detects signals (visit, click, intent, usage)

- It reacts instantly (follow-up, clarify, qualify)

- It keeps leads warm until humans engage

It helps to look at how people misuse the label. A generic chatbot that pops up on a website is not an AI SDR. A sequencing tool blasting outbound campaigns through Outreach or Reply.io is not an AI SDR. A VA scraping LinkedIn and Apollo isn't one either. Those tools execute instructions. They don't decide when to act or who deserves attention. The AI part comes in when software starts making small decisions at a speed and scale that humans can't match.

- Not chatbots

- Not sequencers

- Not scraping VAs

- Not just automation

A simple way to understand it is to think about the "dead air" moments in sales development — the periods where a human would act if they noticed something in time. A lead visits the pricing page twice in a day. A prospect clicks a calendar link but doesn't schedule. A demo request comes in on Saturday morning. A customer that churned last year suddenly shows up on your site again. Traditional SDR workflows ignore these because no one is watching around the clock. This is where an AI SDR earns its keep.

Some products in the space focus on inbound responsiveness, some focus on outbound prioritization, and some attempt both. There are vendors positioning themselves as "AI SDRs" (11x, Regie.ai, Outplay's variants), and there are companies building internal versions using tools like Clay, Zapier, and custom LLM logic hitting HubSpot APIs. The implementations differ, but the goal is the same: monitor, engage, and qualify without a human needing to stare at dashboards all day.

- Inbound-first variants

- Outbound-prioritization variants

- Hybrid/internal builds

This is also why companies that have already invested in structured top-of-funnel motion tend to adopt AI SDRs faster. If you're running Salesforce or HubSpot as your CRM, routing leads through LeanData or Chili Piper, and pushing outreach through Outreach, Salesloft, or Apollo, you already have the plumbing. Adding an AI SDR becomes a question of orchestration rather than reinvention. Teams using Junix or similar platforms lean into this because the system plugs into existing signals instead of trying to replace everything at once.

In short, an AI SDR is not a replacement for human SDRs. It's a layer that keeps the system awake when humans are busy, offline, or overwhelmed. It notices things people don't catch, acts faster than people can, and hands off at the right moment. That's a much less glamorous narrative than "AI replaces SDRs," but it's the one that actually shows up in real revenue environments.

Why the Traditional SDR Model Started Breaking

To understand why companies started looking at AI SDRs seriously, it's worth examining how the SDR job evolved over the last decade. In the mid-2010s, especially in SaaS, the SDR role was fairly repeatable. A manager could source a list from DiscoverOrg or ZoomInfo, load it into Outreach or Salesloft, personalize the first touch, and let a sequence carry the rest. Leads coming in from paid search or content went into Salesforce, where an SDR would respond within a few hours or days. Meetings were booked, pipeline was created, and the model scaled as long as headcount grew alongside it.

- Roles were predictable and sequence-driven

- Single-channel buying behaviors still worked

- Headcount growth = pipeline growth

That model started to crack as the volume of signals multiplied. Buyers began researching more quietly and more asynchronously. Product-led growth added free users who behaved like prospects but didn't follow traditional demo-request paths. Marketing teams layered tools like Clearbit Reveal, Hotjar, Mutiny, and G2 intent data into the stack without a clear owner. Suddenly, the SDR desk wasn't just handling outbound and inbound — it was expected to react to dozens of signals that didn't exist five years earlier.

- Buyers now research silently before engaging

- PLG created new user-led qualification paths

- Marketing tools added intent signals SDRs must react to

- No single owner for new data streams (marketing vs SDR)

The result wasn't just "more work." It was more kinds of work. A single SDR might start the morning with a list of outbound accounts, get interrupted by three inbound forms, need to respond to a product usage spike in the PLG funnel, then find time to qualify a batch of G2 accounts showing "high intent" because marketing wants coverage. Add follow-ups, CRM notes, personalization research, and checking calendar gaps, and suddenly it's 5 PM and only half of the intended sequence went out.

- Outbound, inbound, PLG, and intent all converged on SDRs

- Context switching exploded (inbound → PLG → outbound)

- Follow-up and documentation suffered due to volume

Timing also became a liability. Leads no longer behaved like they did in 2015 when inbound volume was lower and buyers tolerated slower replies. A study by Chili Piper showed that the odds of converting an inbound demo request dropped by more than 50% if the first reply took longer than five minutes. HubSpot internal data showed similar decay curves. Yet most SDR managers still operated with 24-hour SLA expectations because human bandwidth couldn't reliably do better. That gap became expensive fast.

- Inbound conversion depends heavily on first-touch speed

- Market data shows conversion decay within minutes, not days

- SDR SLAs (24 hours) no longer matched buyer expectations

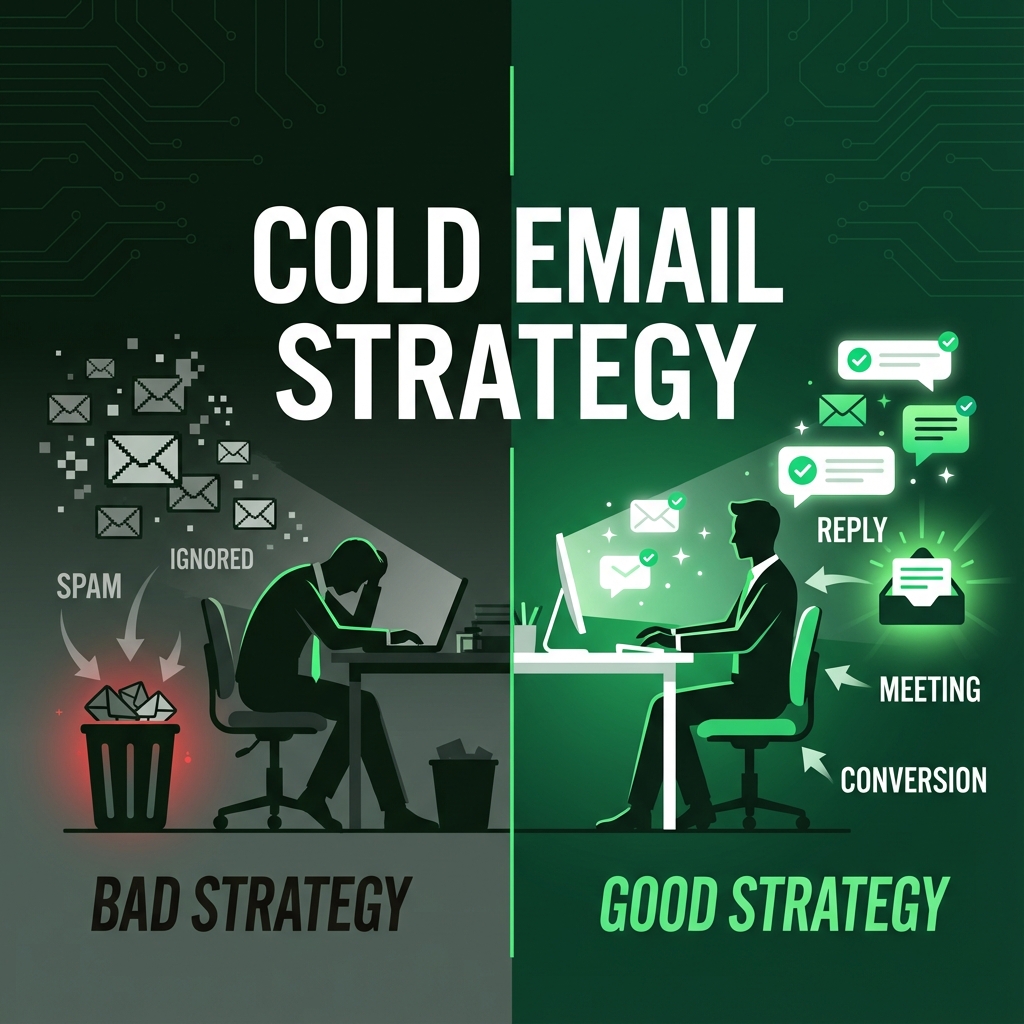

Outbound also lost some of its old predictability. The "spray, sequence, and pray" motion that worked with high-volume email domains has been choked by inbox providers tightening spam controls, filters getting more intelligent, and buyers getting more selective about replying to cold outreach. Many teams tried to compensate by asking SDRs to personalize emails manually, which increased research time per lead but didn't necessarily increase reply rates. When a rep spends 20 minutes on an account only to discover the prospect already signed with a competitor, the frustration is real.

- Email deliverability got harder due to new spam controls

- Buyers reply less to cold outreach and generic personalization

- Manual deep personalization raised time cost per lead

Managers responded the way most rational operators do: they added process. New dashboards, new rules, new SLAs, new definitions of MQL/SQL, new training for objection handling, new cadences for follow-ups. The intent was good, but the system got heavier. Salesforce admin time increased. AE handoffs got more complicated. SDR coaching turned into spreadsheet forensics. None of this made reps faster.

- Operational overhead increased across tools and processes

- Admin burden grew for SDRs and managers

- More process ≠ more pipeline

The other pressure point was turnover. SDR is one of the highest-churn roles in SaaS, with average tenure now hovering around 11–14 months in many companies. By the time a rep is fully ramped, they're often either promoted or burned out. Institutional knowledge never compounds. Processes reset. Recruiting costs stay high. Every VP of Sales knows this pattern, and most have a Slack channel dedicated to celebrating every booked meeting just to keep morale up.

- SDR average tenure: ~11–14 months

- Ramp time eats into productive tenure

- Institutional knowledge rarely compounds

This combination — multi-signal environments, faster buyer expectations, fragile outbound channels, and short SDR tenure — created a structural mismatch between what companies expected from the role and what humans could realistically execute. It wasn't a matter of effort or talent. It was a matter of physics. You can't ask someone to react instantly, personalize deeply, qualify accurately, and multitask relentlessly across five data streams without something slipping.

AI SDRs didn't appear because someone in a lab thought it would be clever to automate sales. They appeared because the economic and operational math at the top of the funnel stopped working. When the funnel becomes a 24/7 signal machine instead of a linear pipeline, humans alone aren't enough to keep it organized. Something had to catch the signals humans were missing — and that's when the AI conversation stopped being theoretical and became operational.

How an AI SDR Actually Works (Day-to-Day Reality)

The easiest way to explain how an AI SDR works is to stop thinking about "AI" and look at what actually happens inside a modern SDR desk on an average Tuesday. A lead views the pricing page twice, but never fills out a form. A free user from a mid-market account activates a feature that usually indicates expansion potential. Someone from a target enterprise clicks a calendar link, starts to book a meeting, then drops off. A competitor's customer visits the blog. A demo request comes in on Saturday afternoon. In most teams, these signals live in different tools and only get acted on if the right human sees them at the right moment.

- Buyer signals come from multiple disconnected tools

- Most signals are time-sensitive

- Humans rarely see all signals in time

An AI SDR's job is to watch for this activity, make small decisions based on patterns, and act fast enough that the opportunity doesn't disappear. It pays attention to inputs that humans would otherwise miss or ignore due to bandwidth: CRM updates in HubSpot or Salesforce, product usage inside a PLG motion, website behavior from Clearbit or Segment, intent data from G2 or Bombora, and of course direct responses through email or chat. None of these signals are magic on their own, but when stitched together, they paint a picture of interest or readiness.

- Input sources include CRM, PLG, web, and intent data

- AI connects signals and infers readiness

- Speed matters more than cleverness
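To make the "stitching" idea concrete, here is a minimal sketch of how signals from different tools might be combined into a single readiness score per lead. Everything in it is an assumption for illustration: the `Signal` shape, the signal names, the weights, the seven-day window, and the threshold are hypothetical, not any vendor's actual model.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical weights; a real team would tune these against booked-meeting data.
SIGNAL_WEIGHTS = {
    "pricing_page_view": 3.0,
    "comparison_post_read": 2.0,
    "calendar_link_click": 4.0,
    "feature_activation": 3.5,
    "g2_intent": 2.5,
}


@dataclass(frozen=True)
class Signal:
    lead_id: str
    kind: str        # e.g. "pricing_page_view"
    source: str      # e.g. "web", "crm", "product", "g2"
    at: datetime


def readiness_scores(signals, now, window=timedelta(days=7)):
    """Sum the weights of each lead's recent signals.

    Signals older than `window` are skipped; unknown kinds contribute 0.
    """
    scores = {}
    for s in signals:
        if now - s.at > window:
            continue
        scores[s.lead_id] = scores.get(s.lead_id, 0.0) + SIGNAL_WEIGHTS.get(s.kind, 0.0)
    return scores


def leads_to_act_on(scores, threshold=4.0):
    """Return lead ids at or above the threshold, highest score first."""
    hot = [(score, lead) for lead, score in scores.items() if score >= threshold]
    return [lead for score, lead in sorted(hot, key=lambda pair: -pair[0])]
```

A lead who reads a comparison post and then views the pricing page would cross the example threshold, while a single stale intent signal would not. The point of the sketch is the shape of the decision, not the specific numbers.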

When the system notices something worth reacting to — for example, a lead visiting the pricing page right after reading a comparison post — it can send a message, ask qualifying questions, or nudge the prospect toward a meeting link without waiting for someone to manually check an analytics dashboard. This isn't about being clever; it's about not letting signals die quietly at the edge of the funnel.

Outbound is similar, but inverted. Instead of watching for inbound intent, the system watches for engagement. If you push an outbound sequence to 500 contacts through Apollo or Salesloft, the system pays attention to who opened, clicked, forwarded, or engaged with assets. Instead of hammering everyone with the same predictable cadence, it reorders who deserves attention next. Humans can do this, but not at the velocity required when thousands of touches are happening weekly.

- Inbound = detect intent and react

- Outbound = detect engagement and reprioritize

- Humans cannot re-sort thousands of prospects in real time
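The re-sorting described above can be sketched in a few lines. This is an illustrative assumption rather than how any particular sequencing tool works: the event names and point values are hypothetical, and a real system would pull events from the outbound platform's own export or API.

```python
from collections import Counter

# Hypothetical point values per engagement event.
ENGAGEMENT_POINTS = {"open": 1, "click": 3, "forward": 4, "reply": 10}


def reprioritize(contacts, events):
    """Order contacts by engagement, most engaged first.

    contacts: list of contact ids in their original sequence order
    events:   iterable of (contact_id, event_type) pairs
    Ties keep their original order because sorted() is stable.
    """
    points = Counter()
    for contact_id, event_type in events:
        points[contact_id] += ENGAGEMENT_POINTS.get(event_type, 0)
    return sorted(contacts, key=lambda contact_id: -points[contact_id])


queue = reprioritize(
    ["a", "b", "c", "d"],
    [("c", "open"), ("c", "click"), ("b", "open"), ("d", "click"), ("d", "open")],
)
```

Here `queue` puts the contacts who clicked ahead of the one who only opened, and leaves the contact with no activity last. Rerunning this on every new batch of events is cheap for software and unrealistic for a person working thousands of touches a week.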

Conversations also get handled differently. Instead of pushing everything to a rep manually, the system can handle the first mile — confirming interest, asking for context, checking qualification basics like tooling, size, or timelines, and capturing meeting availability. Most SDR managers have seen Slack screenshots of AEs complaining about low-quality handoffs; letting software tighten the filter improves confidence downstream.

- AI can run first-pass qualification

- It can confirm genuine interest

- It reduces AE complaints about junk meetings
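A first-pass qualification filter can be as plain as a handful of checks. The sketch below assumes hypothetical fields (`company_size`, `current_tooling`, `timeline_months`) and hypothetical thresholds; the actual criteria would come from a team's own ICP definition.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Answers:
    confirmed_interest: bool
    company_size: Optional[int] = None
    current_tooling: Optional[str] = None
    timeline_months: Optional[int] = None


def first_pass(answers, min_size=50, max_timeline_months=6):
    """Return (decision, reasons) where decision is 'handoff', 'ask_more', or 'nurture'."""
    if not answers.confirmed_interest:
        return "nurture", ["interest not confirmed"]

    # Ask follow-up questions before judging a lead on incomplete information.
    required = ("company_size", "current_tooling", "timeline_months")
    missing = [name for name in required if getattr(answers, name) is None]
    if missing:
        return "ask_more", [f"missing: {name}" for name in missing]

    reasons = []
    if answers.company_size < min_size:
        reasons.append("company below size threshold")
    if answers.timeline_months > max_timeline_months:
        reasons.append("timeline too far out")
    return ("nurture", reasons) if reasons else ("handoff", [])
```

Returning the reasons alongside the decision matters for the handoff: an AE who can see why a meeting was passed through (or held back) has less cause to distrust the filter.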

No serious team expects an AI SDR to negotiate pricing or multi-thread an enterprise deal. That isn't the point. The value shows up in the dead space before humans get involved. A tool like Junix, for example, sits on top of CRM, product, and intent data and specializes in catching this early motion. Other vendors plug into chat. Others focus on outbound prioritization. The mechanics vary, but the pattern is the same: software takes over the parts of the job that are 24/7 and signal-based, not the parts that depend on relationships.

Seen up close, an AI SDR isn't futuristic at all. It's a safety net and a speed boost at the edge of the funnel — the area where timing matters more than charisma. You don't notice it when it's working well because things just move faster. You only notice when it's not there, and a lead goes cold before a rep ever saw it.

Where AI SDRs Work Well — And Where They Fall Apart

Most technologies look cleanest in marketing pages and demo reels. The reality shows up once you deploy them inside a sales org with real quotas, imperfect data, weekend leads, channel conflict, and AE calendars that are already jammed. AI SDRs are no different. They thrive in certain conditions, struggle in others, and absolutely misfire if you treat them like magical replacements for headcount.

- Marketing claims rarely reflect real sales org complexity

- AI behaves differently under quotas and messy data

They work especially well in inbound-heavy environments. If you're running paid demand through LinkedIn and Google, pushing product-qualified leads from a PLG motion, or getting consistent demo requests through the site, an AI SDR can compress time-to-first-response down to seconds. That's not a marketing claim; it shows up in the metrics. Companies that route inbound through software like Chili Piper or Cal.com already know how much every second counts. AI SDRs take that responsiveness further by adding the first layer of qualification and context gathering before a rep ever touches the conversation.

- Inbound-heavy motions benefit most (paid, organic, PLG)

- Speed-to-lead becomes measurable and defensible

- AI adds qualification before human touch

They also shine when outbound volume is high but attention is uneven. A mid-market SDR team sending 2,000–4,000 outbound touches a week simply cannot follow up on every micro-signal manually. Humans drift toward the accounts they're most familiar with. Software doesn't. When someone opens an email at 11:45 PM, clicks a link three times, or revisits the site quietly, an AI SDR can act without waiting for morning standup. This is particularly valuable in orgs targeting North America from EMEA or APAC, where time zones make manual coverage impossible.

- Outbound = high volume, low attention → ideal for AI

- Time zone gaps become less damaging

- Micro-engagements get captured instead of ignored

Where AI SDRs struggle is in environments with unclear ICPs or messy CRM data. If the team can't articulate who they should be talking to — or if Salesforce is full of duplicates and outdated lead statuses — the software ends up amplifying noise instead of making sense of it. Vendors don't like admitting this, but every sales leader who has survived a messy CRM migration understands the pain. Good AI depends on clean plumbing.

- Messy CRMs = bad AI outcomes

- Unclear ICP = wasted touches

Another weak spot is the extremely high-touch enterprise motion, especially when the first interaction requires multi-threading across legal, finance, ops, and buying committees. In those motions, the value of an SDR often has less to do with outreach and more to do with internal choreography. No AI tool is going to navigate procurement politics or wrangle stakeholders on a Fortune 500 account. Those deals move at human speed, and that's fine.

Finally, AI SDRs fall flat when companies adopt them for the wrong reason — usually to "save on headcount" or "automate the whole funnel." That thinking ignores what SDRs are actually good at: reading the room, sensing tone, adapting on the fly, and escalating when the conversation gets interesting. Software is not a replacement for those skills. It's an accelerant for everything that happens before them.

- Software accelerates “pre-conversation” work

- Humans handle persuasion, nuance, and escalation

Teams that get the most out of AI SDRs tend to think in terms of division of labor. Software handles timing and detection. Humans handle persuasion and strategy. The shift isn't about replacing reps — it's about making sure reps aren't wasting their best hours chasing cold signals or responding late to warm ones.

What Actually Improves When Teams Get This Right

Most discussions around AI SDRs default to high-level benefits like "scale" or "efficiency," which sound good but don't help anyone make decisions. The reality is less glamorous but more useful: teams don't buy AI SDRs for innovation points, they buy them because a few critical parts of sales development start working the way they were always supposed to.

The first change most teams notice is response speed. In inbound and PLG-driven funnels, timing is the currency. A demo request that sits for 45 minutes at 2 PM on a weekday has a materially different conversion probability than one responded to within two minutes. Tools like Chili Piper and Cal.com have already conditioned buyers to expect instant scheduling. AI SDRs extend that reflex into qualification and follow-up, which matters when leads arrive at odd hours or from time zones outside SDR coverage.

- Inbound and PLG funnels are highly timing-sensitive

- Buyers now expect instant scheduling and acknowledgment

- AI SDRs compress response times into minutes or seconds

The second improvement shows up in SDR calendars and AE handoffs. When AI takes the first cut at filtering out no-shows, tire-kickers, and misaligned accounts, reps waste less time on conversations that were never going to turn into revenue. One CRO at a mid-market SaaS company told us their AEs stopped complaining about "junk meetings" after routing inbound through an AI SDR layer: only two out of ten meetings were being disqualified during the first five minutes, compared with five out of ten before.

- Meeting quality improves when early filtering is automated

- AE trust increases when junk meetings decrease

- SDR calendars shift toward higher-value conversations

The third shift is prioritization. Humans tend to drift toward accounts that feel familiar or easy. Software doesn't have that bias. When the system starts ranking leads based on observed behavior — opens, clicks, page views, product usage, intent — the order of operations changes. Outbound sequences still go out, but follow-ups start landing on the right people instead of the loudest or most obvious.

These improvements aren't abstract. They show up in actual metrics that people care about:

- Time-to-first-response drops from hours to minutes (or seconds)

- Meeting quality improves because low-intent leads get filtered early

- Pipeline coverage expands to accounts previously ignored due to bandwidth

- AE trust in SDR pipeline increases, improving cross-team morale

There's also a quieter benefit that doesn't get enough attention: consistency over time. SDR performance varies with ramp cycles, hiring waves, and burnout phases. AI doesn't care if it's the last week of the quarter or the day before a rep resigns. It keeps doing the boring parts of the job reliably. That stability removes some of the volatility that VPs of Sales complain about during QBRs when the difference between a good quarter and a bad one comes down to a couple of reps getting sick or promoted at the wrong time.

Finally, there's the operational learning aspect. When the system observes how leads behave across channels, it starts surfacing patterns that humans never had time to analyze. Teams begin asking different questions: Which signals actually correlate with meetings? Which industries convert at higher rates after reading comparison content? Which outbound personas click but never respond? Data teams can answer these questions manually, but it's rarely a priority unless someone is screaming about pipeline.

- Operational patterns emerge from cross-channel data

- Teams can analyze signal → outcome relationships

- Insights influence targeting, content, and outbound strategy

When done well, the lift isn't in automation for its own sake — it's in tightening the loop between signal, action, and outcome. AI SDRs turn that loop into something closer to real-time instead of weekly dashboards or end-of-quarter postmortems. That's where tools like Junix quietly earn their seat: not by replacing human SDRs, but by making sure reps aren't always reacting two steps too late.

When to Adopt — And When to Wait

Not every company needs an AI SDR today, and the fastest way to waste budget is to deploy one before the underlying motion is stable. The best adopters tend to share a few traits: they have more leads or signals than humans can reasonably process, they feel real pain around response speed or follow-up discipline, and they already run a stack where data moves cleanly between systems. That usually means Salesforce or HubSpot as the CRM, some routing or scheduling tooling, and at least one outbound channel in motion.

A common scenario is the post–Series A or post–Series B SaaS company that has moved beyond founder-led sales but hasn't fully staffed out a large SDR team. They're spending money on paid demand, SEO, or PLG, and inbound leads are arriving faster than reps can triage them. Another pattern is the mid-market platform that floods Salesloft or Apollo with outbound sequences but loses warm replies because no one notices the second or third email open on a Saturday. In those environments, adding software to catch the "edges" of the funnel makes sense.

- Young SaaS scaling beyond founder-led sales

- Inbound-heavy teams lagging on response speed

- Outbound-heavy teams missing micro-engagement signals

- Orgs with multi-channel signal overload (PLG + inbound + outbound)

Signs your team is ready look something like this:

- You have inbound volume but inconsistent response times

- Your SDRs are juggling inbound, outbound, PLG, and intent simultaneously

- Your CRM has clean owners for leads, contacts, and accounts

- Your AEs complain about junk meetings more than lack of meetings

- You already have signals you aren't acting on (G2, product usage, web visits)

Teams that struggle with AI SDR deployment often look different. Early-stage startups still figuring out ICP or pricing benefit more from humans doing the discovery themselves. If you're still conducting "founder calls" to learn the problem-space, automating the top of the funnel too early can cut off valuable learning. Another red flag is incoherent data. If HubSpot fields are outdated, owners don't trust the CRM, or half your MQLs live in spreadsheets, adding more software won't fix the plumbing. Tools like Junix work best when the inputs are stable enough that the system can make decisions without guessing.

- Messy or untrusted CRM data

- Undefined or evolving ICP

- Manual spreadsheets acting as the "real CRM"

It's also worth calling out the budget psychology. Some leaders evaluate AI SDRs as a headcount replacement: "could this let us hire fewer SDRs this year?" That framing usually disappoints because it misses where humans excel. SDRs aren't just outreach machines; they're context interpreters. They sniff out politics inside accounts, track competitor motion, and escalate nuance to AEs. Software doesn't do that. The more accurate question is whether humans are spending their time on the right parts of the job. If you're paying comp to have SDRs manually chase stale leads or watch product dashboards all afternoon, something is misallocated.

The teams that adopt well do one thing consistently: they define a clean division of labor. Software handles the monitoring and timing. Humans handle the meetings and strategy. Everything else is implementation detail.

How to Measure Success Without Fooling Yourself

Measurement is where a lot of AI SDR projects go sideways. Leaders default to activity dashboards because that's how SDR teams have been measured for a decade: how many emails went out, how many calls were made, how many touches per account. Those metrics matter for staffing models, but they tell you almost nothing about whether an AI SDR is doing its job. Software can always send more emails than a human. That is not a meaningful win.

The signal you're looking for is not volume. It's flow. Inbound leads moving faster through qualification. Outbound prospects getting followed up on when they actually show interest. AEs receiving cleaner meetings and spending less time disqualifying during the first five minutes of a call. If those behaviors aren't improving, the AI layer isn't adding value — it's just adding noise at computational speed.

- Flow over volume: how fast and clean leads move

- AE acceptance rate: % of meetings AEs consider qualified

- Signal reaction rate: % of signals that triggered action

Teams that understand this tend to track a different set of metrics. They watch time-to-first-response on inbound leads, especially across weekends or non-core hours. They monitor the percentage of SDR-booked meetings that AEs accept as qualified. They measure how many high-intent signals triggered action versus how many were ignored. None of these require advanced analytics; they require discipline and someone who cares about the experience after the meeting is booked.
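None of these metrics need special tooling. As a minimal sketch, assuming you can export lead timestamps and simple counts from the CRM (the function names and input shapes here are hypothetical), the three numbers reduce to a median and two ratios:

```python
from datetime import datetime
from statistics import median


def median_minutes_to_first_response(leads):
    """leads: iterable of (created_at, first_response_at or None).

    Leads that never got a response are excluded from the median,
    so track their count separately rather than letting them vanish.
    """
    waits = [
        (responded - created).total_seconds() / 60
        for created, responded in leads
        if responded is not None
    ]
    return median(waits) if waits else None


def rate(numerator, denominator):
    """Plain ratio that returns None instead of dividing by zero."""
    return numerator / denominator if denominator else None


def ae_acceptance_rate(meetings_accepted, meetings_booked):
    """Share of SDR-booked meetings that AEs accepted as qualified."""
    return rate(meetings_accepted, meetings_booked)


def signal_reaction_rate(signals_acted_on, signals_seen):
    """Share of high-intent signals that triggered any action."""
    return rate(signals_acted_on, signals_seen)
```

Computing the response-time median separately for weekend and off-hours leads is a small extension: filter the input list by `created_at` before calling the function.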

It helps to call out a few metrics that look good on dashboards but don't correlate with revenue:

- Total outbound volume: always goes up when you add software

- Email open rates: affected by Apple's Mail Privacy Protection more than by interest

- Demo requests: meaningless if qualification happens after booking

- Chat engagements: easily inflated by accidental clicks

A CRO at a PLG infrastructure company told us they originally declared their AI SDR rollout a win because email throughput doubled. Six weeks later, AEs were rejecting half the meetings as unqualified and complaining in Slack that they were "drowning in noise." When they changed the scoreboard to track AE acceptance rate and median time-to-first-touch, the picture reversed — outbound volume dropped, but pipeline quality improved and the AE calendar stabilized. That's the game.

This is also where platforms like Junix earn trust with ops and leadership. Instead of just firing off sequences, they anchor to CRM realities. Meetings are booked into the right owner. Context is preserved. Qualification data is captured. If a team runs Salesforce or HubSpot with Chili Piper for routing and Cal.com for scheduling, adding Junix on top creates a closed loop: signals in, actions out, data back to the source of truth. That's what makes measurement possible instead of theoretical.

If you want a simple sanity check, ask one question at the end of every month: "Did our humans spend more time in good conversations and less time chasing ghosts?" If the answer is no, the tooling isn't doing its job.

Conclusion — A Calm Way to Think About AI SDRs

The excitement around AI SDRs can make it sound like we're witnessing a revolution in sales, but anyone who has lived in a quota-carrying org knows that sales doesn't change in revolutions. It changes in increments. First, reps stop chasing cold signals. Then inbound stops getting bottlenecked. Then AE calendars stop getting clogged with no-shows. Then operations stops firefighting handoffs. Ten months later, leadership wonders why the last two quarters felt smoother than the ones before. That's how this stuff actually lands in the real world.

- Sales teams adopt through increments, not revolutions

- Operational smoothness is the real long-term outcome

If you strip away the marketing, an AI SDR is just a way of handling the parts of sales development that humans aren't built for: watching for micro-signals, reacting at odd hours, filtering noise, and keeping the system warm. Humans still do the selling. The software just makes sure the right conversations happen instead of the wrong ones.

The teams that get this right don't replace SDRs. They make SDRs more valuable. They let people spend their best hours pushing deals forward, not staring at dashboards or copying notes into Salesforce. And they design their tooling stack so data flows cleanly instead of fracturing between product, marketing, and sales. In those environments, adding something like Junix or Sia isn't a futuristic leap — it's plumbing. It's the connective tissue between modern buyer behavior and the revenue systems built to respond to it.

For everyone else, the best advice is simple: don't adopt because of hype. Don't hold off because of pride. Look at your motion honestly. If your funnel is signal-heavy and responsiveness matters, software will help. If your funnel is still human-learned and founder-driven, talk to buyers yourself for a while longer. There's no medal for being first, and no penalty for being late. There's just pipeline, calendars, and conversations — and whichever mix produces revenue for your business is the right one.